30 Jan Artificial Intelligence Act of European Commission

This article covers ‘Daily Current Affairs’ and the topic details of “AI Act of European Commission”. This topic is relevant in the “Science & Technology” section of the UPSC CSE exam.

Why in the News?

In response to fears that Artificial Intelligence (AI) is being excessively regulated in Europe, the European Commission has introduced a set of rules aimed at fostering AI innovation. Following the political agreement reached in December 2023 on the EU AI Act, described as the first-ever comprehensive law on AI globally, the European Commission aims to encourage the development, deployment, and use of trustworthy AI within the European Union (EU).

About Europe’s AI Innovation Plan

- The European Commission has initiated a comprehensive plan to support startups and small businesses in Europe for the development of trustworthy AI. Key components of the plan include acquiring, upgrading, and operating AI-dedicated supercomputers to facilitate fast machine learning and the training of large general-purpose AI (GPAI) models.

- GPAI models are versatile AI systems capable of performing a wide range of tasks with minimal modification, and are at the forefront of the plan. The initiative aims to broaden the use of AI to include public and private users, including startups and SMEs.

- The plan also focuses on supporting the AI startup and research ecosystem by assisting in algorithmic development, testing, evaluation, and validation of large-scale AI models. The goal is to enable the creation of diverse emerging AI applications based on GPAI models.

Why is the EU putting emphasis on AI Innovation of late?

- Challenges of Overregulation: Europe’s focus on AI innovation stems from concerns that it is overregulating AI and lagging behind American companies like OpenAI and Google in terms of visible AI innovation. The EU has faced accusations of regulating AI preemptively, before the technology has been widely adopted across the continent.

- AI Act Criticism: The AI Act, on which political agreement was reached in December 2023, has faced criticism for its rules governing AI use within the EU.

- While it provides clear guidelines for law enforcement agencies, it also imposes strict penalties on companies violating the regulations.

- The Act restricts facial recognition technology and the use of AI to control behavior.

- It allows governments to use real-time biometric surveillance in public areas only in the case of specific serious threats.

About European Union’s Artificial Intelligence Act

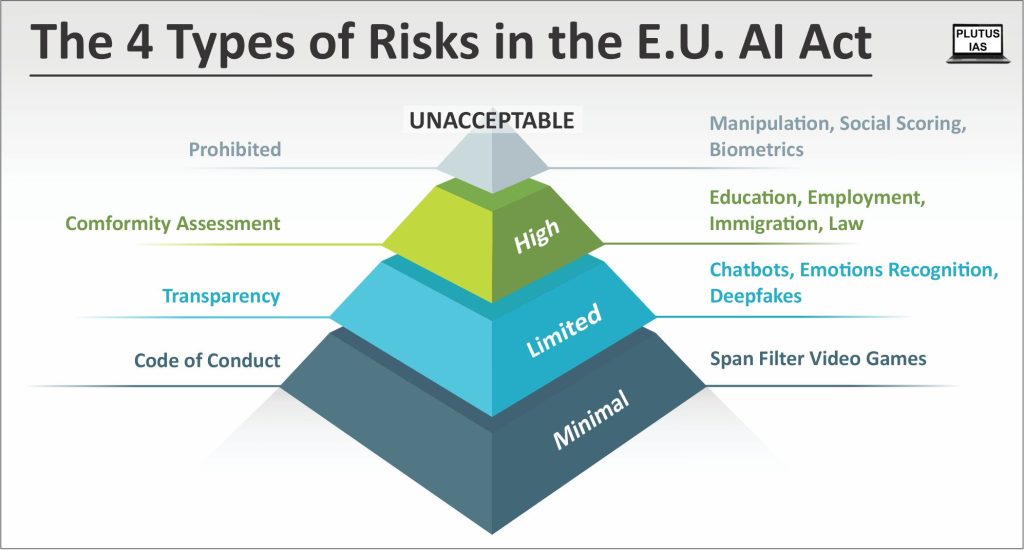

- Risk Classification System: In a groundbreaking move, the AI Act proposes a risk-based regulatory system, categorizing AI systems into unacceptable risk, high risk, and low or minimal risk segments.

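- Unacceptable Risk: AI systems considered a threat to people, such as those used for cognitive manipulation or biometric identification, are banned, apart from the narrow exceptions for real-time biometric surveillance noted above.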

- High Risk: Systems operating critical infrastructure or impacting fundamental rights are labeled as high risk. Compliance requirements and obligations for providers are outlined in the Act.

- Risk Management for High Risk: The Act outlines risk management efforts for high-risk systems, including documentation, transparency, and human oversight.

- Low or Minimal Risk: AI systems in this category need only meet minimum transparency standards, so that users can make informed judgements.

- Generative AI software must comply: The AI Act specifically targets generative AI software like ChatGPT and mandates compliance with transparency requirements. These include disclosing the AI origin of content, implementing safeguards against illegal content generation, and publishing summaries of copyrighted data used in the model’s training.
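
For revision purposes, the tiered scheme described above can be pictured as a simple lookup from risk tier to regulatory consequence. The sketch below is a study aid only: the names `RiskTier`, `OBLIGATIONS` and `obligations_for` are hypothetical, and the tier labels and obligations are paraphrased from the bullets above, not taken from the legal text of the Act.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk tiers as summarised in this article (not the Act's legal wording)."""
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LOW_OR_MINIMAL = "low or minimal risk"


# Hypothetical mapping for illustration: each tier -> consequences listed above.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned"],
    RiskTier.HIGH: ["documentation", "transparency", "human oversight"],
    RiskTier.LOW_OR_MINIMAL: ["minimum transparency standards"],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the consequences this article associates with a tier."""
    return list(OBLIGATIONS[tier])


if __name__ == "__main__":
    for tier in RiskTier:
        print(f"{tier.value}: {', '.join(obligations_for(tier))}")
```

The point of the table-like structure is that obligations attach to the tier, not to the individual product: once a system is placed in a tier, its compliance burden follows from that placement.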

Comparison of EU’s Plan with India’s Approach

- Similarities with India’s Strategy: The EU’s plan shares similarities with India’s approach to AI innovation. India is also striving to develop its own sovereign AI, build computational capacity, and offer compute-as-a-service to startups.

- India’s Capacity Building programmes: India aims to build a compute capacity of 10,000-30,000 GPUs through a public-private partnership model and an additional 1,000-2,000 GPUs through the PSU Centre for Development of Advanced Computing (C-DAC). The government is exploring incentive structures, including a capital expenditure subsidy model and a usage fee, to encourage private companies to establish computing centers.

- Digital Public Infrastructure (DPI): Similar to the EU’s plan, India aims to create a digital public infrastructure (DPI) out of this pooled GPU capacity, allowing startups to access computational capacity at a reduced cost. This approach removes the need for startups to invest in expensive GPUs of their own, eliminating a significant cost.

Prelims practice questions

Q1) Consider the following statements:

1) Big Data in AI supplies the large datasets used for training AI models

2) Machine learning is a subfield of AI that focuses on creating systems that can independently make decisions without explicit programming

3) Natural Language Processing (NLP) is the programming language for AI

How many of the above statements are correct?

a) One

b) Two

c) Three

d) None

Answer: B

Q2) The concept of “singularity” in AI means which one of the following?

a) A point in the future where AI surpasses human intelligence

b) A programming language for AI

c) The process of creating sentient machines

d) A type of neural network architecture

Answer: A

Mains practice question

Q1) How has artificial intelligence (AI) influenced and transformed the field of healthcare? Provide specific examples of AI applications and their impact on patient care, diagnosis, and medical research.

Q2) Discuss the ethical considerations and potential risks associated with the use of artificial intelligence in decision-making processes, particularly in areas such as criminal justice, finance, and hiring practices.

I am a content developer and have done my Post Graduation in Political Science. I have given 2 UPSC mains, 1 IB ACIO interview and have cleared UGC NET JRF too.
