31 Jan Neuromorphic Computing

An artificial synapse for brain-like (neuromorphic) computing was recently developed by a team of scientists at the Jawaharlal Nehru Centre for Advanced Scientific Research (JNCASR). They built it using scandium nitride (ScN), a semiconductor with exceptional stability and CMOS compatibility. This article is related to daily current affairs for the UPSC examination.

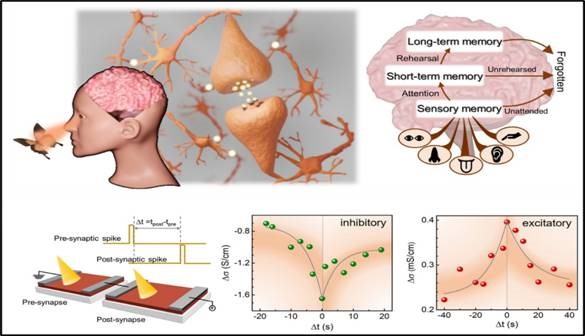

About Neuromorphic Computing

- The term “Neuromorphic Computing” was first used in the 1980s and refers to a type of computing that is inspired by the human brain and the nervous system.

- It describes the design of computer systems whose elements are modeled after the neurons and synapses of the human brain and nervous system.

- Without requiring much space for software to be installed, the devices of a neuromorphic computing system aim to work as efficiently as the human brain.

- The development of the Artificial Neural Network (ANN) model is one of the technological developments that has reignited scientists’ interest in neuromorphic computing systems.

Working Principle

- Artificial Neural Networks (ANN), which are composed of millions of synthetic neurons and are modeled after the brain’s neurons, are used to power neuromorphic computing.

- Using the Spiking Neural Network (SNN) architecture, these neurons communicate with one another in layers, transforming input into output via electric spikes or signals.

- This enables the machine to closely mimic the neuro-biological networks in the human brain and to carry out tasks such as visual recognition and data interpretation quickly and efficiently.
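
The spiking behaviour described above can be illustrated with a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. This is an illustration only, not the model used by any particular chip or by the JNCASR team; the function name `simulate_lif` and the values of `threshold`, `leak`, and `reset` are assumptions chosen for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    All parameter values are illustrative assumptions, not hardware values.
    Returns a list of 0/1 spikes, one per input sample.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Leak part of the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)    # the neuron fires a spike
            potential = reset   # and its potential is reset
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # A weak constant input produces a spike only once enough charge builds up.
    print(simulate_lif([0.3] * 10))  # prints [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The neuron stays silent while its potential builds up and emits a spike only when the threshold is crossed, which is how information ends up encoded in the timing of spikes rather than in continuous values.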

Characteristics

The characteristics of neuromorphic computers are as follows:

- Memory and processing are combined. Instead of having separate areas for each, neuromorphic chips, inspired by the human brain, process and store data together on each neuron. By collocating processing and memory, neural net processors and other neuromorphic processors avoid the von Neumann bottleneck and can deliver both high performance and low energy consumption.

- Highly parallel. Neuromorphic chips such as Intel Labs’ Loihi 2 can have up to one million neurons. These neurons operate on different functions simultaneously, so in theory a neuromorphic computer can carry out as many tasks at once as it has neurons. This kind of parallel processing imitates stochastic noise, the seemingly random firing of neurons in the brain.

- Compared to conventional computers, neuromorphic computers are more suited to processing this stochastic noise.

- Naturally scalable. These computers do not face the traditional barriers to scaling. To run larger networks, users add more neuromorphic chips, which increases the number of active neurons.

- Computation is driven by events. Individual neurons and synapses compute only in response to spikes from other neurons. This means that just a small subset of neurons is processing spikes at any given time, while the rest of the computer remains idle. This makes power usage highly efficient.

- High levels of plasticity and adaptability. Like humans, these computers are designed to adapt to changing stimuli from the outside world. In the spiking neural network (SNN) architecture, each synapse is assigned a voltage output and adjusts this output based on its task.

- SNNs are designed to evolve different connections in response to potential synaptic delays and a neuron’s voltage threshold. With greater plasticity, researchers expect neuromorphic computers to learn, solve novel problems, and adapt quickly to new situations.

- Fault tolerant. Neuromorphic computers are highly fault tolerant. As in the human brain, information is stored in several places, so the computer can continue to work even if one of its components fails.
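
The event-driven characteristic listed above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any real neuromorphic chip is programmed: the function `run_event_driven`, the dictionary-based network layout, and the `threshold` value are all hypothetical. The point it shows is that work is done only for neurons that actually receive a spike.

```python
from collections import deque


def run_event_driven(synapses, initial_spikes, threshold=1.0, max_events=1000):
    """Propagate spikes through a network, touching only neurons that receive events.

    synapses: dict mapping a neuron id to a list of (target_id, weight) pairs.
    initial_spikes: iterable of neuron ids that fire at the start.
    Returns (fired, updates): the neurons that fired, in order, and the number
    of membrane-potential updates that were performed.
    """
    potentials = {}                # only neurons that received input appear here
    queue = deque(initial_spikes)  # pending spike events
    fired = []
    updates = 0
    while queue and len(fired) < max_events:  # max_events bounds cyclic networks
        source = queue.popleft()
        fired.append(source)
        for target, weight in synapses.get(source, []):
            # Work happens only here, in response to an incoming spike.
            potentials[target] = potentials.get(target, 0.0) + weight
            updates += 1
            if potentials[target] >= threshold:
                potentials[target] = 0.0  # reset after firing
                queue.append(target)
    return fired, updates


if __name__ == "__main__":
    # Hypothetical network: neuron "e" has a synapse but never receives a spike.
    network = {"a": [("b", 1.0), ("c", 0.4)], "b": [("d", 1.2)], "e": [("a", 1.0)]}
    print(run_event_driven(network, ["a"]))  # prints (['a', 'b', 'd'], 3)
```

In this toy network only three potential updates are performed, and neuron "e" costs nothing because no event ever reaches it. This is the intuition behind the power savings of event-driven computation.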

Challenges of Neuromorphic Computing System

- Many experts believe neuromorphic computing can transform the power, efficiency, and capabilities of AI algorithms while also revealing new insights into cognition. However, neuromorphic computing is still in its infancy and faces several challenges.

- Accuracy: Neuromorphic computers use less energy than deep learning and machine learning hardware and edge graphics processing units (GPUs). However, they have yet to prove conclusively more accurate than these alternatives. Combined with the high cost and complexity of the technology, this accuracy issue leads many people to prefer traditional software.

- Limited algorithms and software: Software for neuromorphic computing systems still lags behind the hardware. Most neuromorphic research is still carried out with standard deep-learning software and algorithms developed for von Neumann hardware.

- This restricts research results to conventional approaches, which neuromorphic computing seeks to move beyond. In a 2019 interview with Ubiquity, Katie Schuman, an assistant professor and neuromorphic computing researcher, stated that the adoption of neuromorphic computing technologies “will require a paradigm shift in how we think about computing as a whole.” Though this is a challenging task, the future of computing innovation depends on our ability to move beyond conventional von Neumann systems.

- Inaccessible: Neuromorphic computers are not yet accessible to nonexperts. Software developers have not yet produced the application programming interfaces, programming models, or languages that would make them more widely usable.

- Lack of benchmarks: Neuromorphic research lacks clearly defined benchmarks for performance and common challenge problems. Without these standards, it is difficult to evaluate the performance of neuromorphic computers and demonstrate their efficacy.

- Neuroscience: Neuromorphic computers are limited by what is known about human cognition, which is still far from fully understood. For instance, some theories, such as the orchestrated objective reduction (Orch OR) theory proposed by Sir Roger Penrose and Stuart Hameroff, contend that human cognition is based on quantum computation. If cognition requires quantum rather than conventional computation, neuromorphic computers would be incomplete approximations of the human brain and might need to incorporate technologies from fields such as probabilistic and quantum computing.

Implications of the study

- Neuromorphic hardware aims to mimic a biological synapse, which monitors and remembers the signal generated by stimuli.

- Using ScN, the team built a synapse-like device that both controls the transmission of the signal and retains the signal.

- Significance: This invention has the potential to be turned into an industrial product, since it offers a new material for stable, CMOS-compatible optoelectronic synaptic functionalities at a relatively low energy cost.

- Memory storage and processor modules are physically separate on conventional computers. As a result, data transfer between these components throughout an operation consumes a great deal of power and time.

- In contrast, the human brain is a superior biological computer that is both smaller and more efficient, because the synapse (the junction between two neurons) serves as both a processor and a memory storage unit.

- The brain-like computing technique can assist in meeting the rising computational needs in the contemporary era of artificial intelligence.
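
The idea of a synapse that both remembers and processes a signal can be pictured with a toy model. The sketch below is purely illustrative: the class `ToySynapse` and its `gain` and `retention` parameters are hypothetical, unitless values chosen for the example, and they do not describe the measured behaviour of the ScN device.

```python
class ToySynapse:
    """Toy synapse whose weight both stores past stimuli and scales new signals.

    All parameters are hypothetical and unitless; they are not measured ScN values.
    """

    def __init__(self, gain=0.2, retention=0.95):
        self.weight = 0.0           # the stored "memory" of past stimuli
        self.gain = gain            # how much one stimulus strengthens the synapse
        self.retention = retention  # fraction of the weight kept per time step

    def step(self, stimulus):
        """Advance one time step; stimulus is 1 (pulse) or 0 (no pulse)."""
        self.weight = self.retention * self.weight + self.gain * stimulus
        return self.weight

    def transmit(self, signal):
        """Scale an incoming signal by the stored weight (processing in place)."""
        return self.weight * signal


if __name__ == "__main__":
    synapse = ToySynapse()
    for pulse in [1, 1, 1, 0, 0]:
        synapse.step(pulse)
    # The weight grew during the pulses and has only partly decayed since.
    print(synapse.weight, synapse.transmit(2.0))
```

Because the same stored weight is used to scale the next signal, no data has to travel between a separate memory and a separate processor, which is the contrast with conventional computers drawn in the list above.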

Significance of Neuromorphic Computing

- Neuromorphic computing has paved the way for advancements in science and quick development in computer engineering.

- In the field of artificial intelligence, neuromorphic computing has been a ground-breaking idea.

- Combined with machine learning techniques used in artificial intelligence (AI), neuromorphic computing has improved information processing and made it possible for computers to work with better and more advanced technology.

Download the PDF now:

Plutus IAS current affairs 31st Jan 2023
